Numerical precision

import matplotlib.pyplot as plt

import numpy as np

For numerical application computers store numbers with a finite precision.

- limited memory available

- limited processing power

This can lead to problems when numbers with large magnitude difference are combined

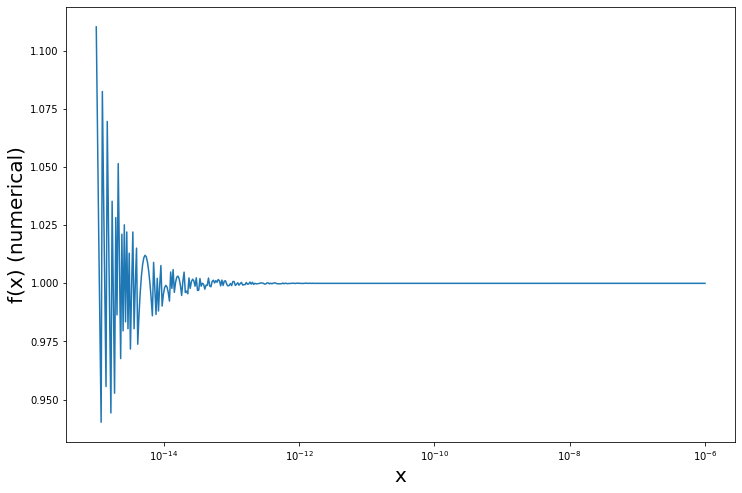

Let’s look at the function

$$f(x)= \frac{(1+x) - 1}{x}=1$$

This should always return 1. But if $x$ is very small we get in trouble when we use this function numerically on a computer.

def f(x):

return ((1.0 + x) - 1.0) / x

plt.figure(figsize=(12,8))

exps = np.linspace(-6,-15,500)

xs = 10.0**(exps)

plt.plot(xs,f(xs))

plt.xscale('log')

plt.xlabel('x',fontsize=20)

plt.ylabel('f(x) (numerical)',fontsize=20);

Explanation

Floating point numbers are stored in the form

$\pm m \cdot 2^e$ where $1\leq m < 2$

Things are easier to visualise in decimal rather than binary representationn. Say our computer works in base ten only have 5 digits precision for $x$ (normally about 16) and

$x=0.0123456789$

the representation would be

$x=1.23457\cdot 10^{-2}$

Let’s start evaluating $1+x$:

$1+0.0123457 = 1.0123457$

which is represented as

$1.01235 \cdot 10^0 $

Now we subtract $1$:

$1.01235 - 1 = 0.01235 $

which is represented as

$1.235\star\star \cdot 10^{-2}$

where the $\star$ have to be made up by the computer. It is done in a deterministic manner but they can’t be the right digits for all calculations.

If we now divide by the original $x$ to get $f(x)$ we get

$\frac{1.235\star\star\cdot 10^{-2}}{1.23457\cdot 10^{-2}} = 1.00\star\star\star$

We can see that instead of $1$ we get some “corruption”. This is not surprising as we would need 7 digits of precision to be able to carry out the calculation without loss.

Numerical errors

- this is a bit of a contorted example

- in practice it is not always an issue

- one has to be careful when considering “small number”

- we will do this very often:

- differentiating

- integrating

- we will need to tune our “small” numbers to be

- small enough for the approximation to hold

- large enough to avoid numerical round-off errors

Testing for equality

there are other pitfalls with numerical precision. The most common is that due to numerical round off errors values that should be equal are not! For example $$ \sum_1^{1000} \frac{1}{1000} \neq 1 $$

x=0.0

for i in range(1000):

x+=0.001

x == 1

False

x

1.0000000000000007

Testing for equality

It is better to:

- use inequalities

- calculate offsets rather than accumulating

xmin = 0

xmax = 1

n = 1000

delta = ( xmax - xmin ) / n

x\_accumulate = 0

for i in range(n):

x\_accumulate += delta # dangerous

for i in range(n):

x\_calculate = xmin + i/float(n) * (xmax - xmin) # better

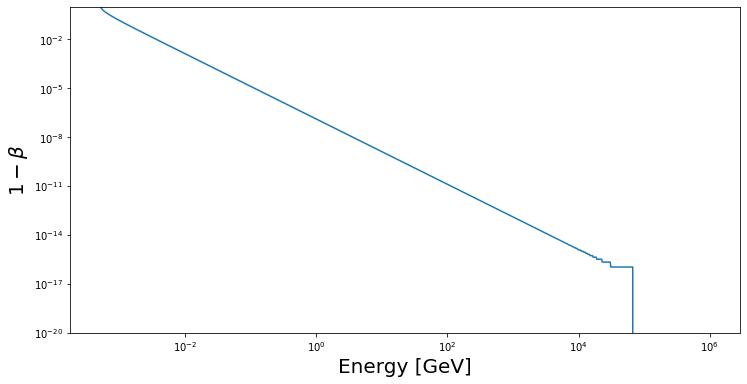

Slightly more Realistic Example

In particle physics we often need the velocity in terms of the speed of light $$\beta = \sqrt{1-m^2/E^2},$$ where $m$ is the particle mass and $E$ its energy in natural units.

We often need to evaluate $$1-\beta,$$ for $E\gg m$ so that $$1-\beta\to \frac{m^2}{2E^2}.$$

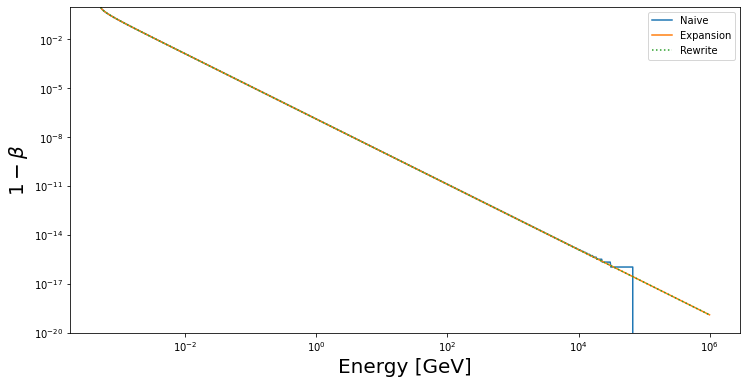

plt.figure(figsize=(12,6))

energy = np.logspace(-3.292,6,1000)

m = 0.51e-3

def oneMinusBeta(x):

return 1.-np.sqrt(1.-m**2/x**2)

plt.plot(energy,oneMinusBeta(energy))

plt.xscale('log')

plt.yscale('log')

plt.ylim([1e-20,1])

plt.xlabel('Energy [GeV]',fontsize=20)

plt.ylabel('$1-\\beta$',fontsize=20);

A more accurate form

Think about how you could rewrite $1-\beta$ in a way which is more numerically stable.

Hint

There’s two ways we can do this

- One which always works is to replace the full expression with a series expansion for small $m/E$

- One trick for this case is to use $1-\beta^2=\frac{m^2}{E^2}$ to rewrite $1-\beta$

Solution

So we could either write

- $1-\beta\approx \frac{m^2}{2E^2}+\frac{m^4}{8E^4}$ valid for $m\ll E$

- $1-\beta = \frac{m^2}{E^2(1+\beta)}$ valid for all $m$

def oneMinusBeta\_exp(x) :

return np.where(x<100.*m,oneMinusBeta(x),

0.5*m**2/x**2+0.125*m**4/x**4)

def oneMinusBeta\_re(x) :

return m**2/x**2/(1.+np.sqrt(1.-m**2/x**2))

plt.figure(figsize=(12,6))

energy = np.logspace(-3.292,6,1000)

m = 0.51e-3

plt.plot(energy,oneMinusBeta(energy),label='Naive')

plt.plot(energy,oneMinusBeta\_exp(energy),label='Expansion')

plt.plot(energy,oneMinusBeta\_re(energy),label='Rewrite',linestyle=':')

plt.xscale('log')

plt.yscale('log')

plt.ylim([1e-20,1])

plt.xlabel('Energy [GeV]',fontsize=20)

plt.legend()

plt.ylabel('$1-\\beta$',fontsize=20);