Optimisation

Most problems involve some sort of optimisation.

- Physics:
    - find the ground state of a system
    - Lagrangian mechanics: find the trajectory that minimizes (more precisely, makes stationary) the action
    - optimize the parameters of a model (minimize the prediction error)
- Life:
    - which mortgage to pick
    - which course to concentrate revision on
- Business:
    - maximize return on investment
    - minimize risk

Given a function

$$f(x_1, x_2, \dots, x_N)$$

of $N$ parameters, how do I find its minimum or maximum?

- try all values
    - not practical in most cases
    - very costly computationally
    - need to decide on a search grid beforehand
- analytically
    - differentiate and find the zero(s) of the derivative
    - not always possible
- numerical methods

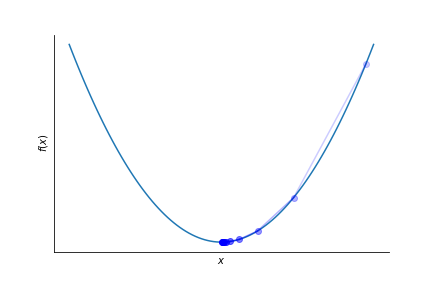
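To make the cost of "try all values" concrete, here is a minimal brute-force grid search in plain Python. The functions `grid_search` and `paraboloid` are illustrative names of our own, not from any library, and the resolution of 41 points per axis is an arbitrary assumption.

```python
import itertools


def grid_search(f, bounds, n_points):
    """Evaluate f on a regular grid; return (best point, best value, #evaluations).

    bounds is a list of (low, high) pairs, one per parameter. Every axis gets
    n_points equally spaced values, so the grid has n_points ** len(bounds) nodes.
    """
    axes = []
    for low, high in bounds:
        step = (high - low) / (n_points - 1)
        axes.append([low + i * step for i in range(n_points)])

    best_point, best_value, n_evals = None, float("inf"), 0
    for point in itertools.product(*axes):  # every combination of axis values
        value = f(*point)
        n_evals += 1
        if value < best_value:
            best_point, best_value = point, value
    return best_point, best_value, n_evals


def paraboloid(x, y):
    # Hypothetical test function with its minimum at (1, -0.5).
    return (x - 1.0) ** 2 + (y + 0.5) ** 2


best_point, best_value, n_evals = grid_search(paraboloid, [(-2.0, 2.0), (-2.0, 2.0)], 41)
print(best_point, best_value, n_evals)  # 41 * 41 = 1681 evaluations for two parameters
```

The grid only locates the minimum to within its spacing (0.1 here), and the number of evaluations grows as $41^N$: for $N = 10$ parameters that is already about $1.3 \times 10^{16}$ evaluations.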

Gradient descent

Gradient descent finds a minimum of a function by taking successive downhill steps until a convergence criterion is met.

Algorithm:

- start from an initial guess $x_0$

- calculate the gradient at that position

- take a step along the negative gradient to the next position

- repeat

$$ x_{i+1} = x_i - \eta \nabla f(x_i) $$

$\eta$ is the step size. It has to be chosen carefully.

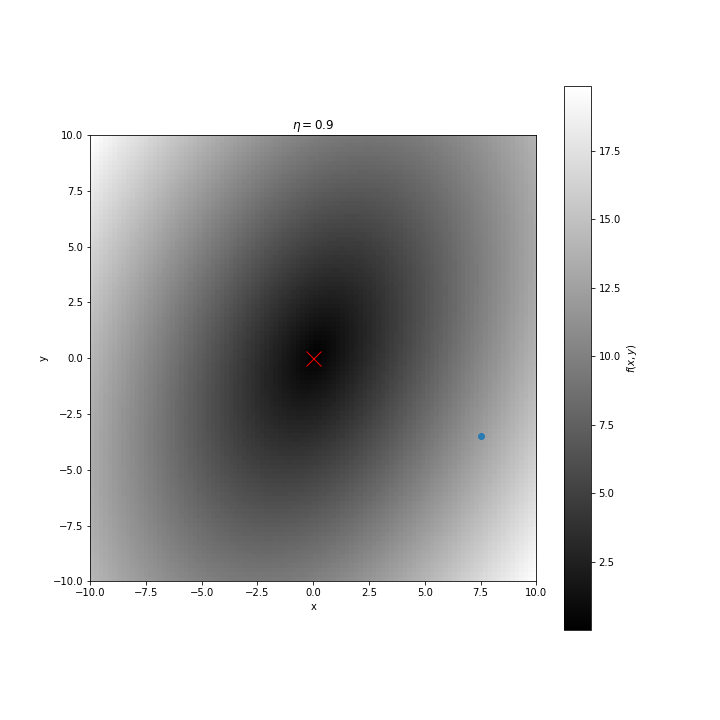

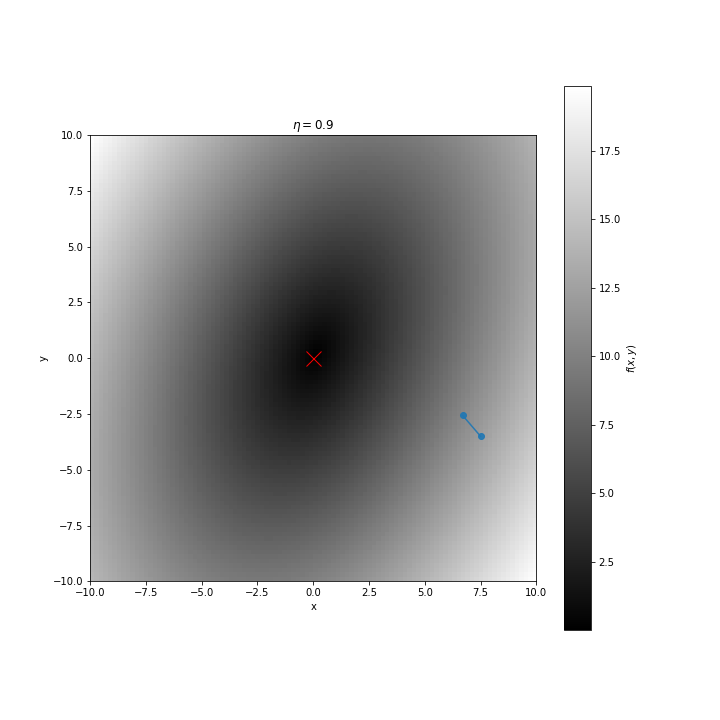

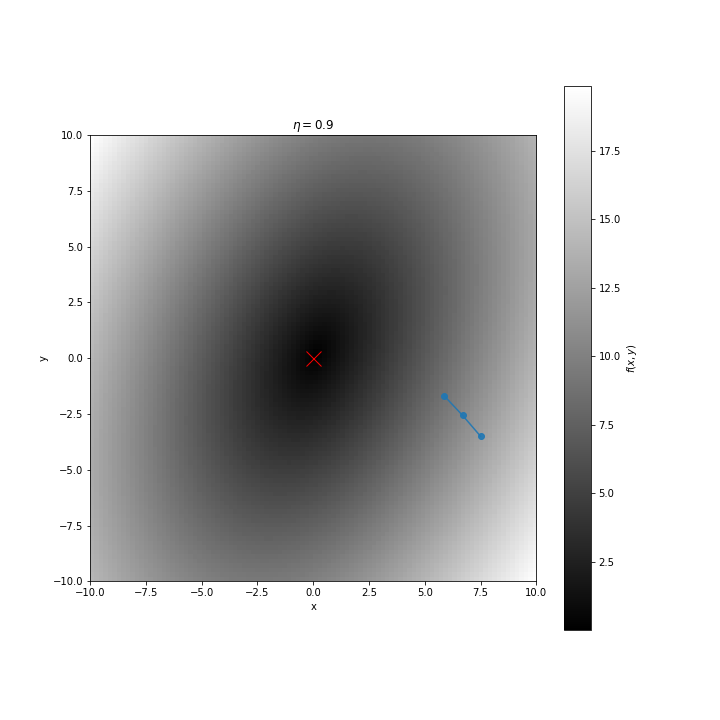

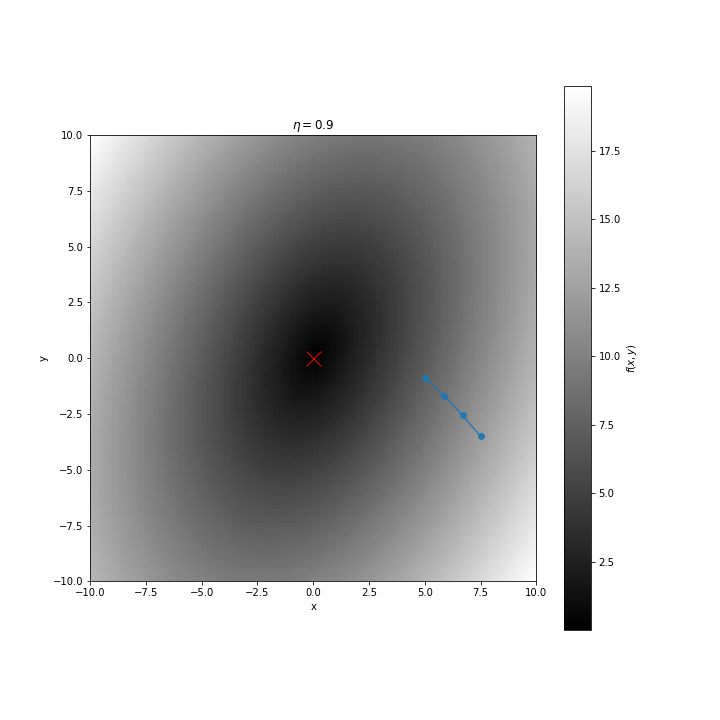

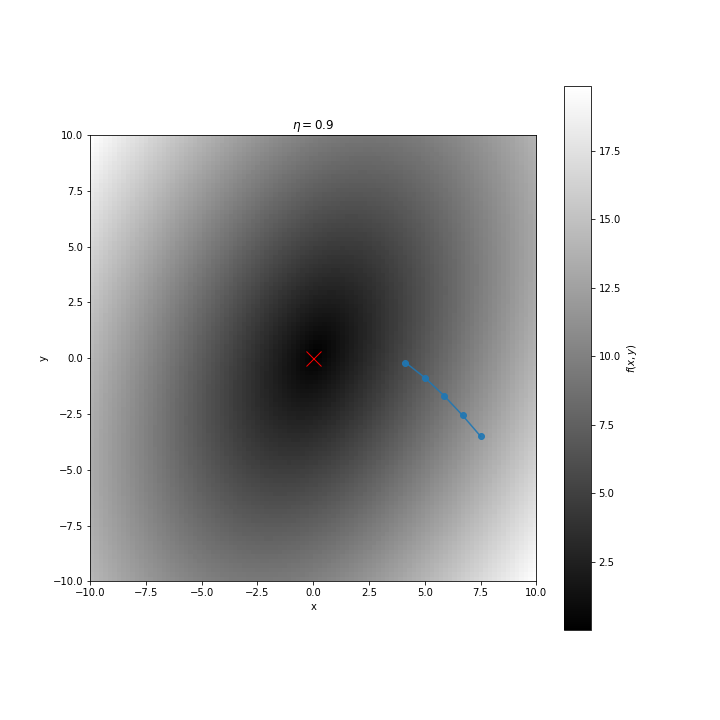

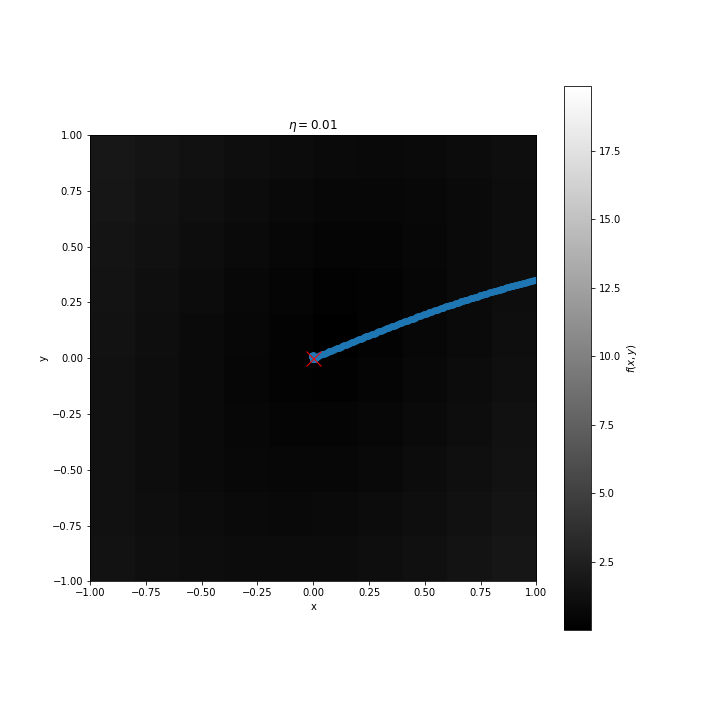
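The update rule $x_{i+1} = x_i - \eta \nabla f(x_i)$ translates almost line by line into code. A minimal sketch in plain Python, assuming a fixed step size and a fixed number of steps (no convergence test yet); the quadratic $f(x, y) = x^2 + 3y^2$ and the name `gradient_descent` are illustrative choices of our own.

```python
def gradient_descent(grad, x0, eta, n_steps):
    """Fixed-step gradient descent: x_{i+1} = x_i - eta * grad(x_i).

    grad takes a list of coordinates and returns the gradient as a list.
    """
    x = list(x0)
    for _ in range(n_steps):
        g = grad(x)
        x = [xi - eta * gi for xi, gi in zip(x, g)]
    return x


def grad_f(p):
    # Gradient of the example function f(x, y) = x^2 + 3 y^2, minimum at (0, 0).
    x, y = p
    return [2.0 * x, 6.0 * y]


x_min = gradient_descent(grad_f, x0=[2.0, 1.0], eta=0.1, n_steps=100)
print(x_min)  # both coordinates end up very close to 0
```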

Step size

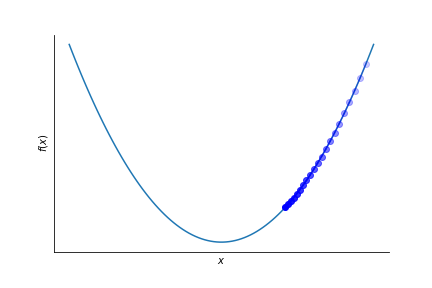

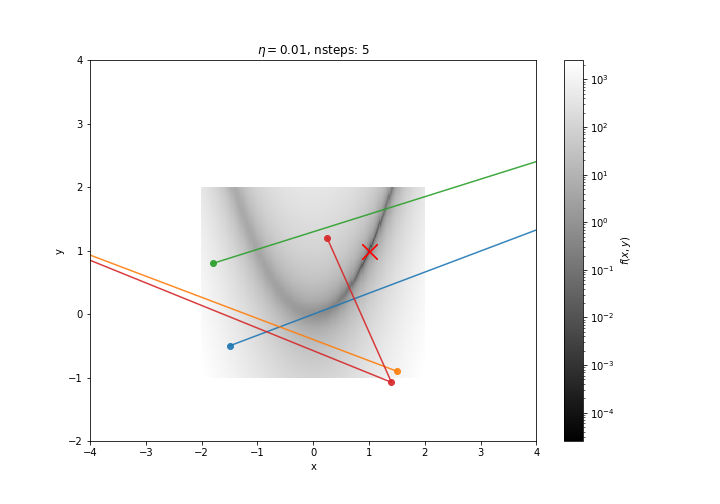

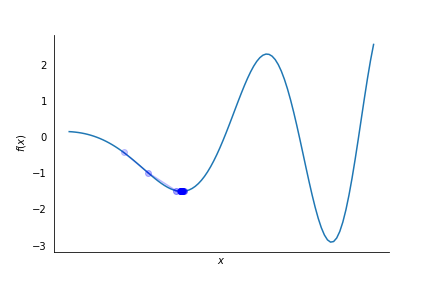

If the step size is too small, convergence is very slow.

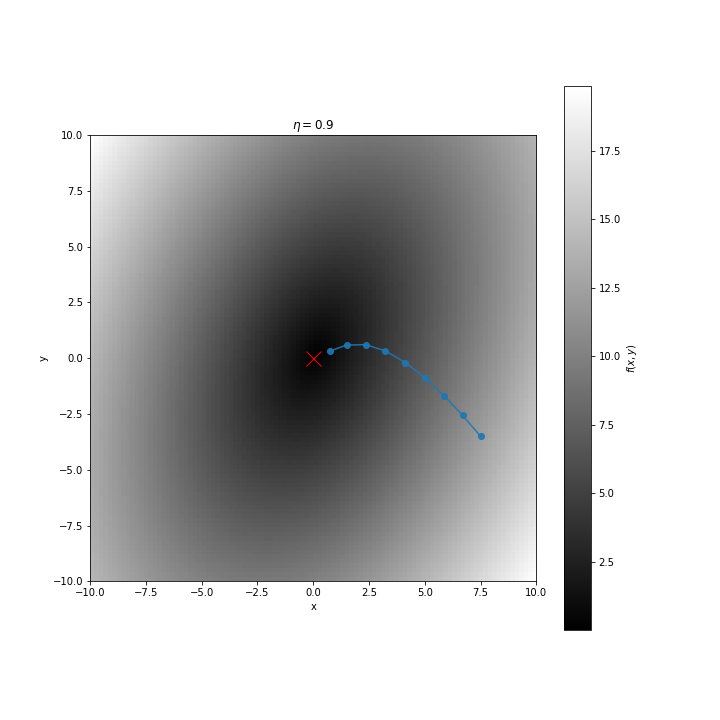

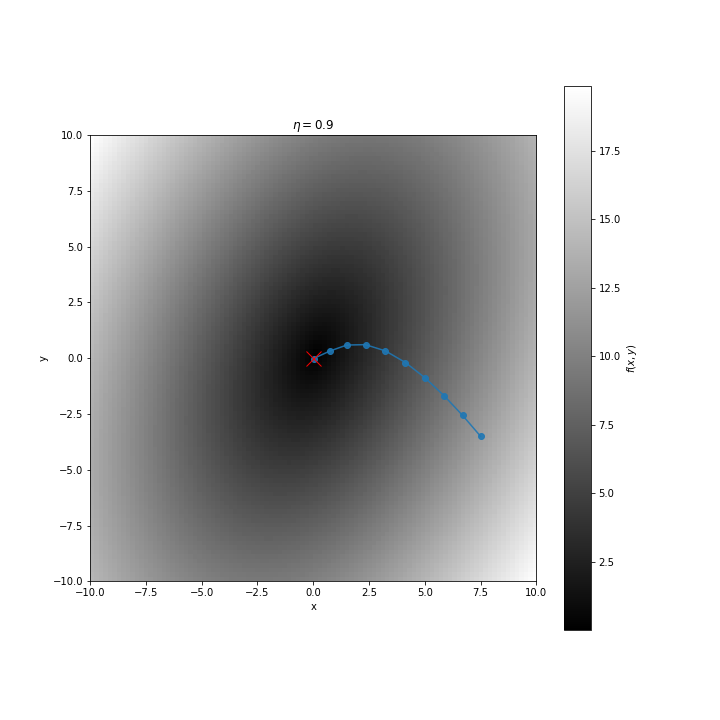

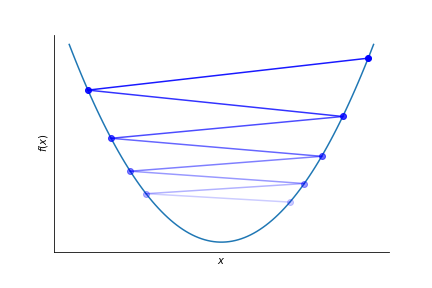

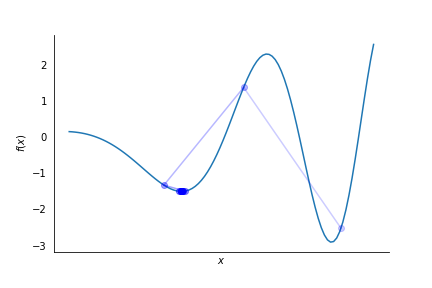

If the step size is too large, we can lose convergence altogether!

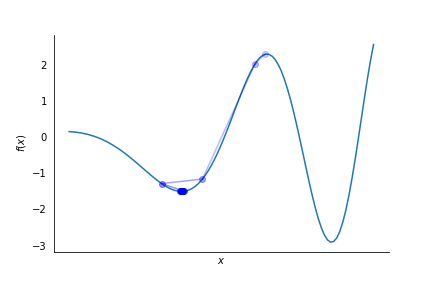
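The effect of the step size can be checked on the simplest possible case, $f(x) = x^2$ with gradient $2x$. A small sketch (the function name and the three values of $\eta$ are our own illustrative choices): each update multiplies $x$ by $1 - 2\eta$, so the iteration converges only when $|1 - 2\eta| < 1$, i.e. $0 < \eta < 1$ for this function.

```python
def descend_parabola(eta, x0=1.0, n_steps=20):
    """Gradient descent on f(x) = x^2, whose gradient is 2x.

    Each update x <- x - eta * 2x multiplies x by (1 - 2 * eta), so the
    iteration converges to the minimum at 0 only if |1 - 2 * eta| < 1.
    """
    x = x0
    for _ in range(n_steps):
        x = x - eta * 2.0 * x
    return x


for eta in (0.01, 0.4, 1.1):  # too small, about right, too large
    print(f"eta = {eta}: x after 20 steps = {descend_parabola(eta):.3g}")
```

With $\eta = 0.01$, $x$ has only shrunk to about $0.67$ after 20 steps; with $\eta = 0.4$ it is essentially $0$; with $\eta = 1.1$ every step overshoots and $|x|$ grows by a factor $1.2$ per step, reaching about $38$.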

With a step size that is about right:

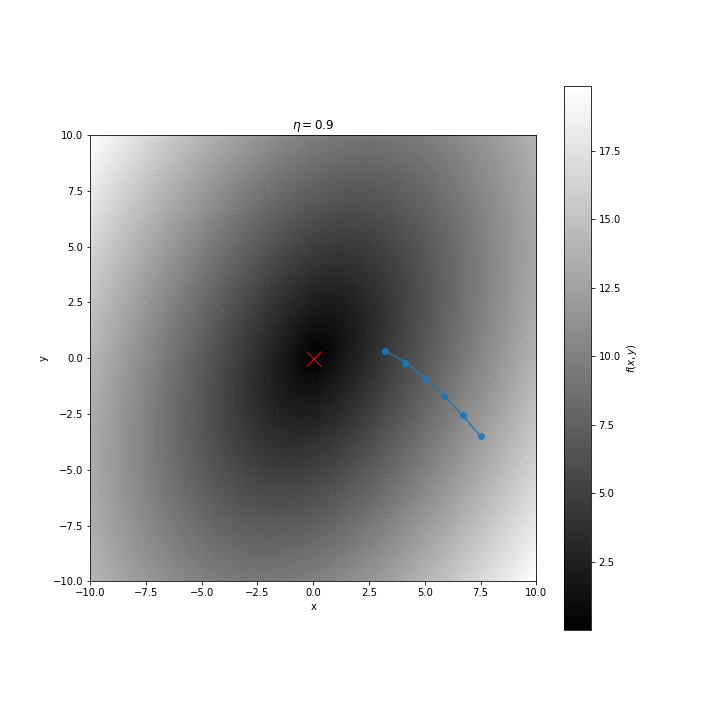

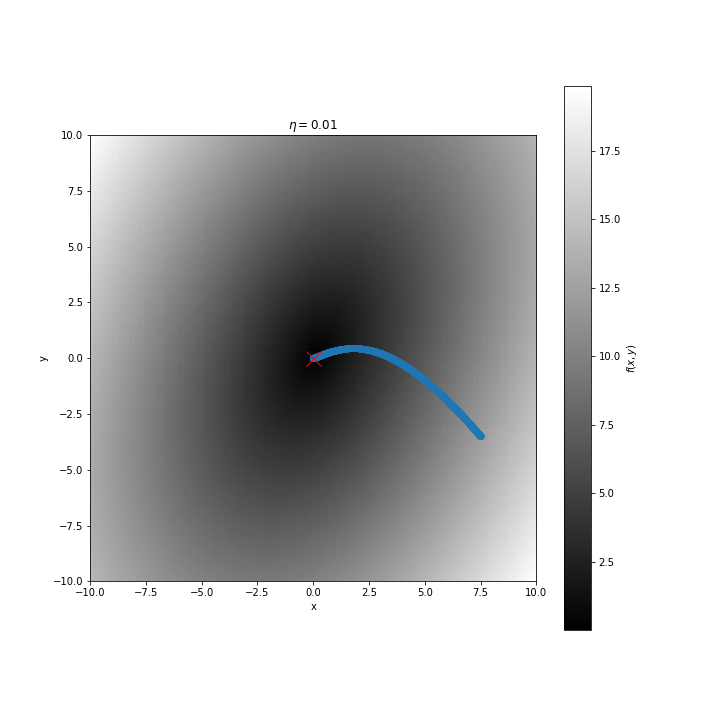

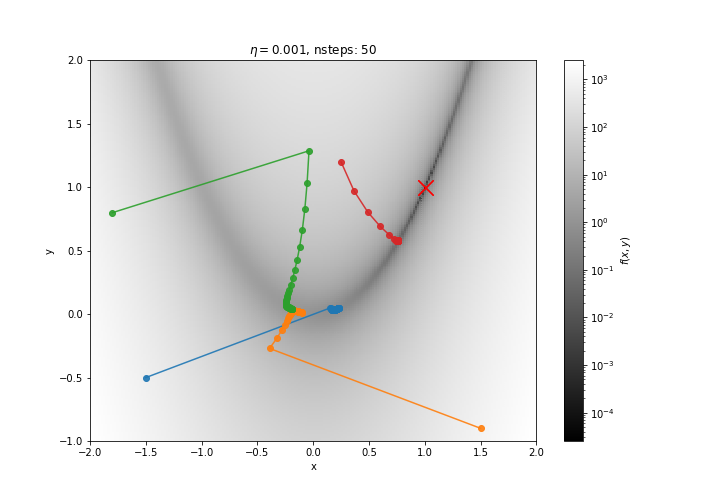

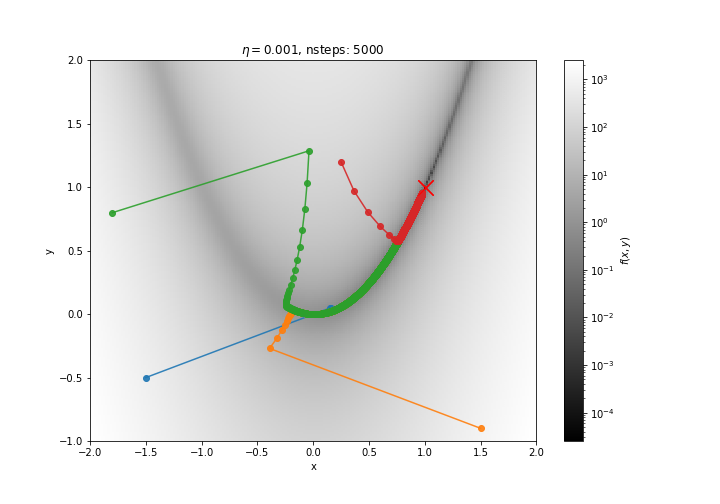

Let’s try a smaller $\eta$.

Looks better…

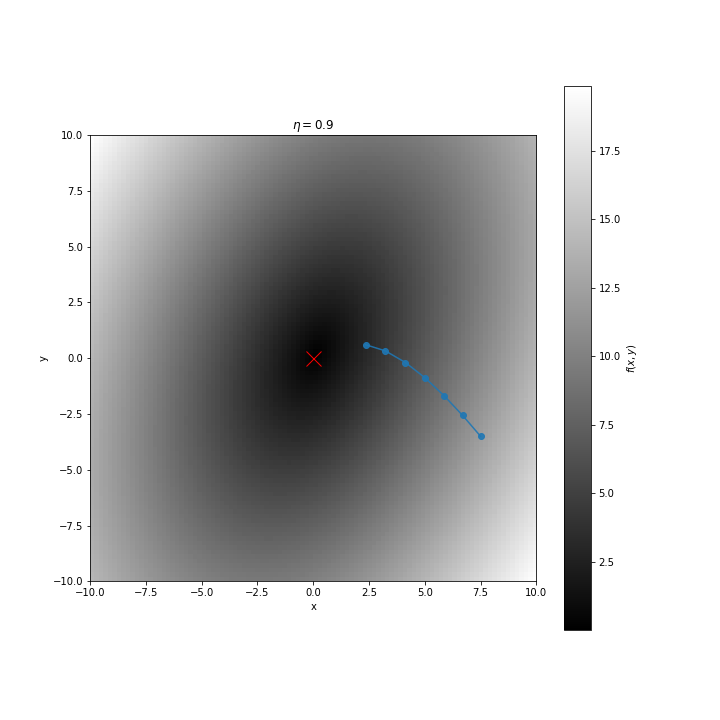

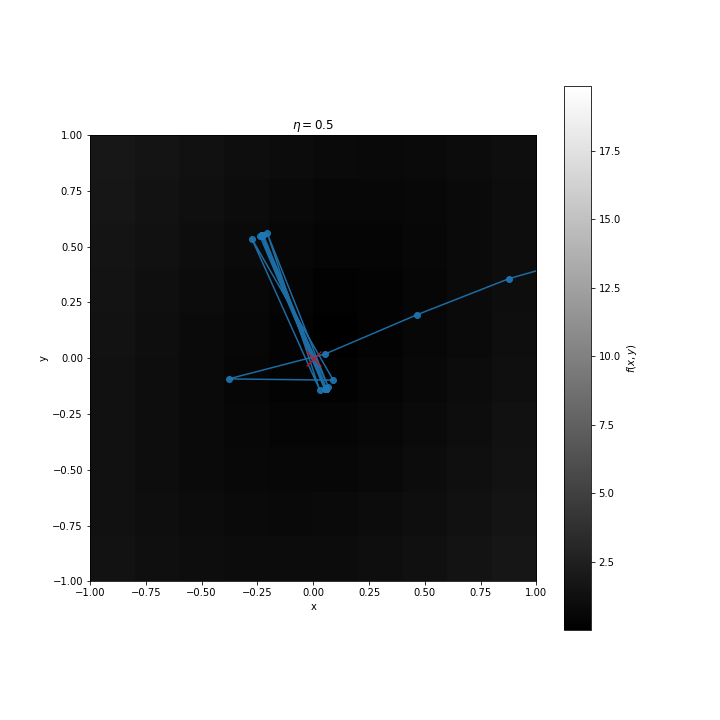

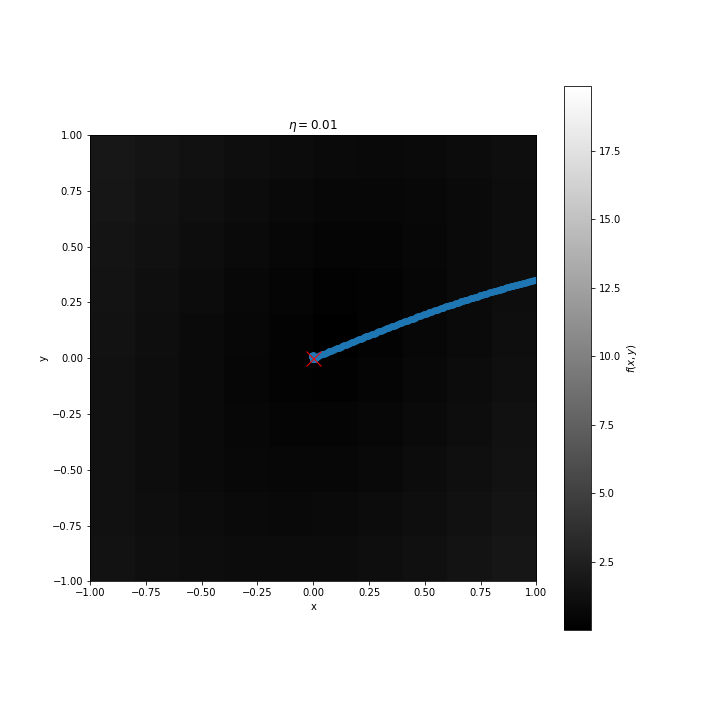

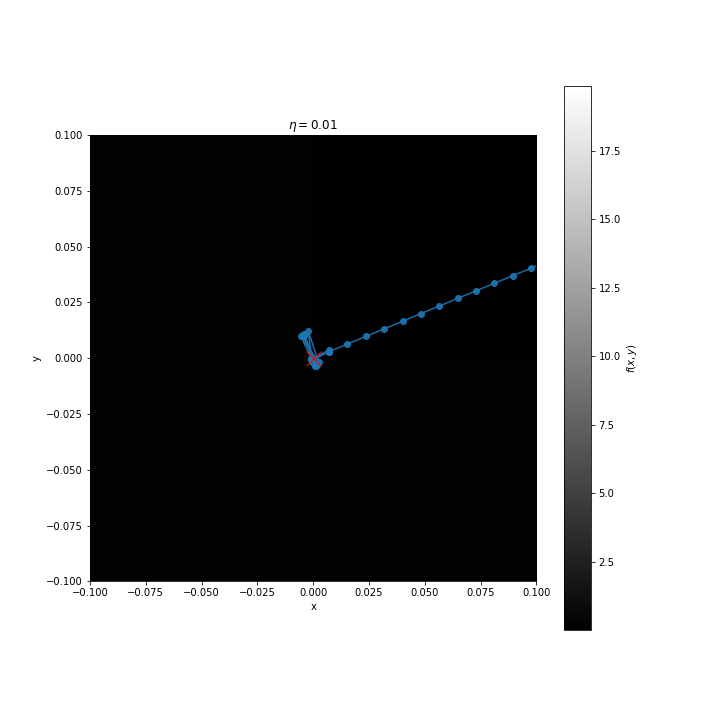

Let’s zoom in further.

Still fine…

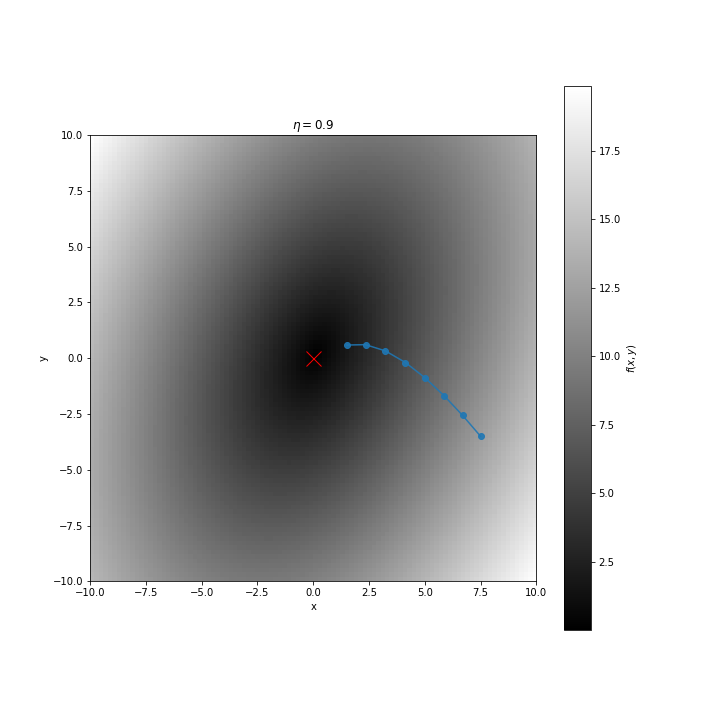

Let’s zoom in even more…

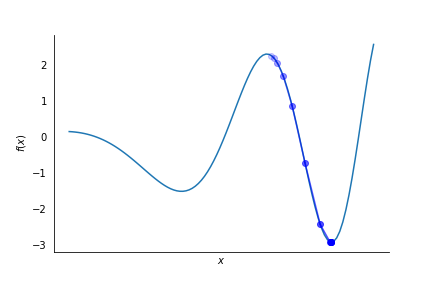

We find oscillations again!

We will typically end up oscillating around the bottom of the well, so we need a stopping criterion:

- a maximum number of steps

- the value of the function no longer changes by much between steps
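Both stopping criteria fit naturally into the descent loop. A sketch in plain Python; the defaults `tol=1e-12` and `max_steps=10_000` are arbitrary illustrative values, the test function is the hypothetical quadratic $x^2 + 3y^2$, and `gradient_descent_with_stop` is our own name.

```python
def gradient_descent_with_stop(f, grad, x0, eta, max_steps=10_000, tol=1e-12):
    """Fixed-step gradient descent with two stopping criteria.

    Stops when |f(x_new) - f(x_old)| < tol (converged) or after max_steps
    steps (gave up). Returns (x, number of steps taken, converged flag).
    """
    x = list(x0)
    f_old = f(x)
    for step in range(1, max_steps + 1):
        g = grad(x)
        x = [xi - eta * gi for xi, gi in zip(x, g)]
        f_new = f(x)
        if abs(f_new - f_old) < tol:  # f has stopped changing: converged
            return x, step, True
        f_old = f_new
    return x, max_steps, False  # step budget exhausted


def f(p):
    x, y = p
    return x * x + 3.0 * y * y


def grad_f(p):
    x, y = p
    return [2.0 * x, 6.0 * y]


x, n_steps, converged = gradient_descent_with_stop(f, grad_f, [2.0, 1.0], eta=0.1)
print(x, n_steps, converged)
```

An absolute tolerance on $f$ is the simplest choice; a relative tolerance, or a test on the size of the gradient, are common alternatives.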

It is possible to use an adaptive step size: start with a large step size and reduce it when oscillations appear.
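One simple way to implement this, sketched below under our own assumptions: treat an increase of $f$ after a step as the sign of overshooting (one could instead watch for the gradient changing sign), reject that step and halve $\eta$. The function name and defaults (`eta=1.0`, `shrink=0.5`) are illustrative, not a standard API.

```python
def adaptive_gradient_descent(f, grad, x0, eta=1.0, shrink=0.5, max_steps=1000, tol=1e-12):
    """Gradient descent whose step size starts large and is reduced on overshoot.

    Overshoot is detected as f increasing after a step: the step is then
    rejected and eta is multiplied by `shrink`. Returns (x, final eta).
    """
    x = list(x0)
    fx = f(x)
    for _ in range(max_steps):
        g = grad(x)
        x_new = [xi - eta * gi for xi, gi in zip(x, g)]
        f_new = f(x_new)
        if f_new > fx:
            eta *= shrink  # overshot: keep the old point, try a smaller step
            continue
        if fx - f_new < tol:  # accepted step barely changed f: stop
            return x_new, eta
        x, fx = x_new, f_new
    return x, eta


def f(p):
    x, y = p
    return x * x + 3.0 * y * y


def grad_f(p):
    x, y = p
    return [2.0 * x, 6.0 * y]


x, eta_final = adaptive_gradient_descent(f, grad_f, [2.0, 1.0], eta=1.0)
print(x, eta_final)  # eta was halved from 1.0 down to 0.25
```

Here the first two trial steps ($\eta = 1$ and $\eta = 0.5$) increase $f$ and are rejected; from $\eta = 0.25$ on, every step decreases $f$ and the step size is kept.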

Example

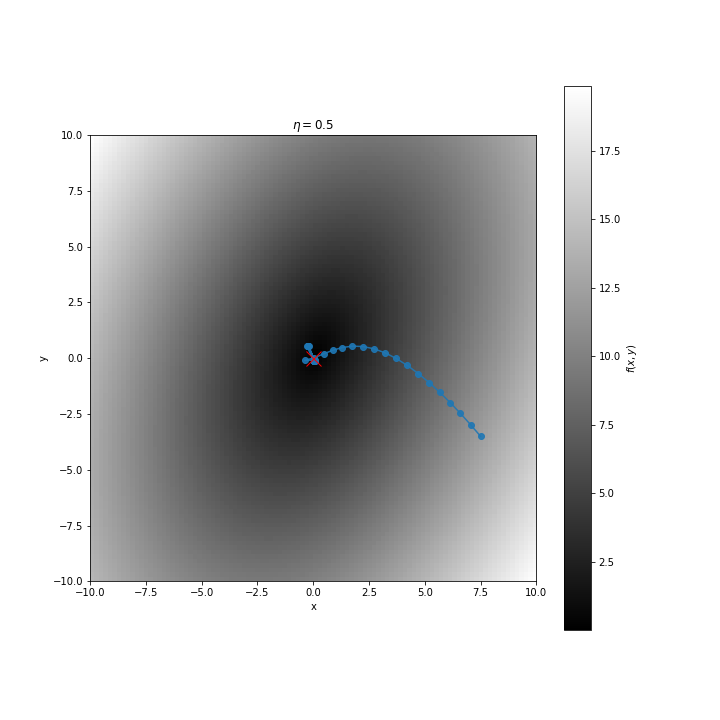

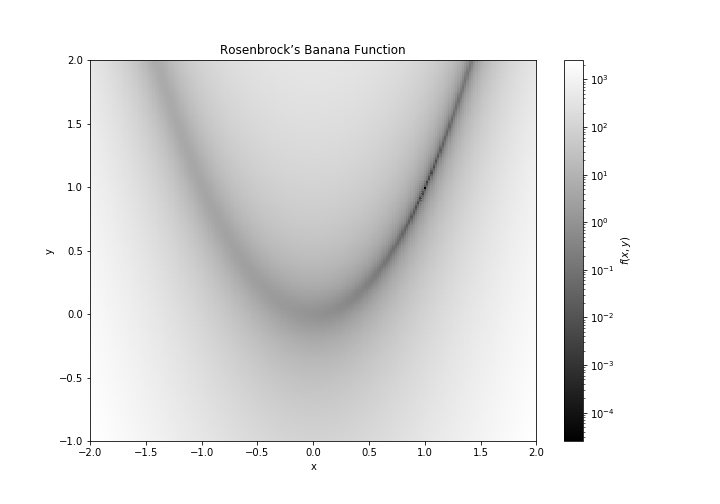

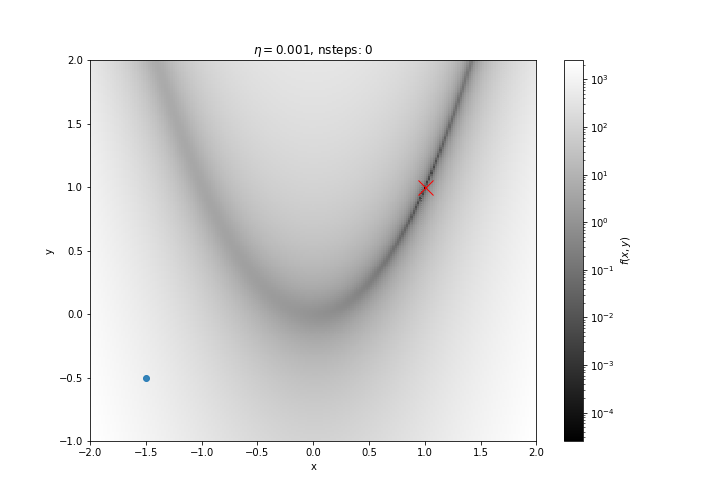

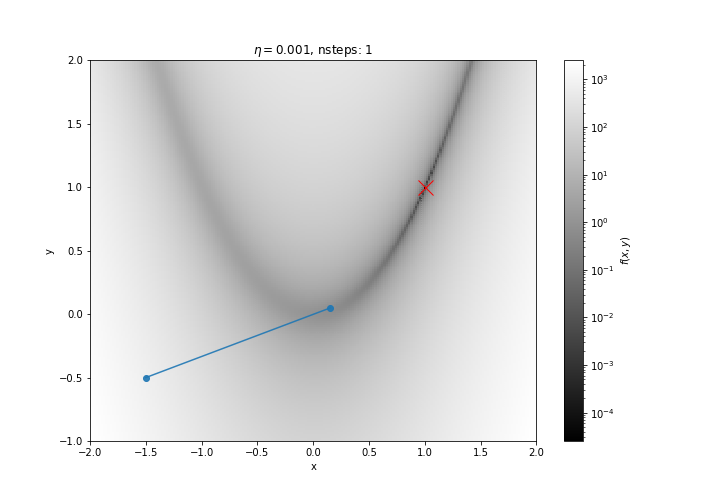

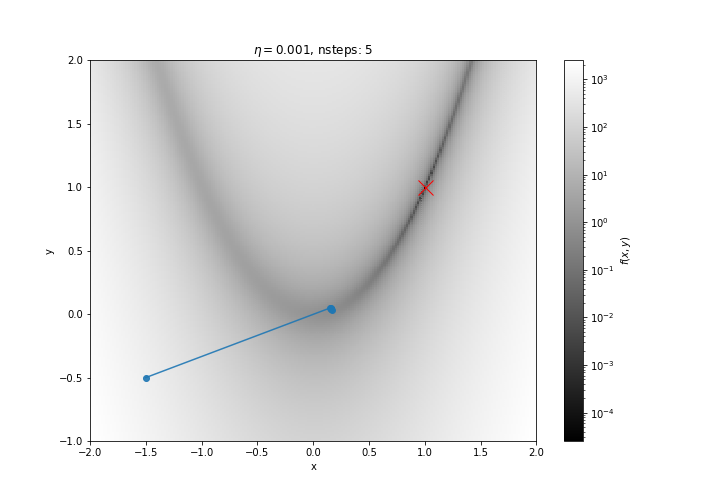

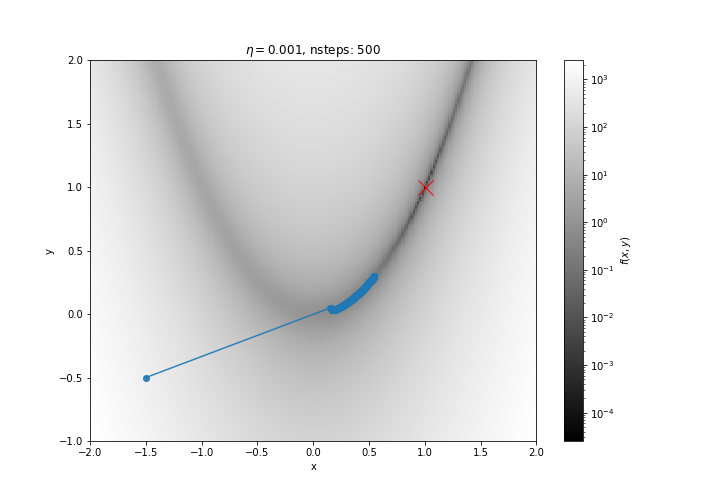

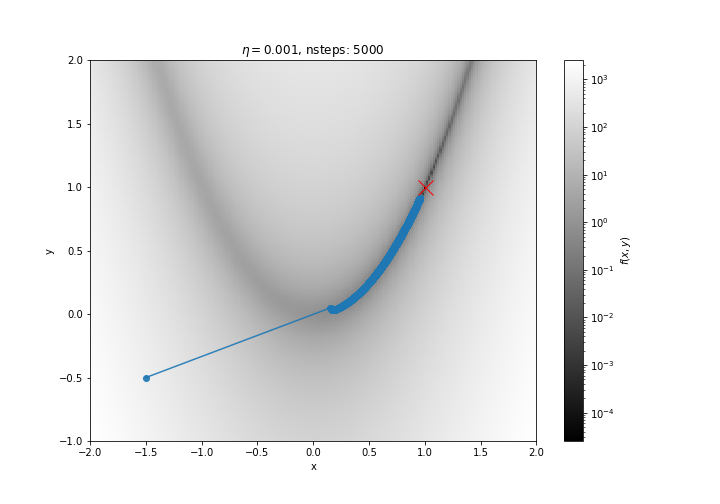

Rosenbrock’s Banana Function

$$ f(x, y) = (1 - x)^2 + 100\left(y - x^2 \right)^2 $$

A classic, challenging test case for finding minima: the global minimum, $f(1, 1) = 0$, lies at the bottom of a long, narrow, curved valley.
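For reference, differentiating $f$ gives the gradient needed by the update rule, and differentiating again gives the Hessian at the minimum $(x, y) = (1, 1)$:

$$ \nabla f(x, y) = \begin{pmatrix} -2(1 - x) - 400\,x\left(y - x^2\right) \\ 200\left(y - x^2\right) \end{pmatrix}, \qquad H(1, 1) = \begin{pmatrix} 802 & -400 \\ -400 & 200 \end{pmatrix} $$

The eigenvalues of $H(1, 1)$ are approximately $\lambda_{\max} \approx 1001.6$ and $\lambda_{\min} \approx 0.40$. Treating $f$ as quadratic near the minimum, a fixed step is stable only for $\eta < 2/\lambda_{\max} \approx 0.002$, and with such a step the error along the valley (the $\lambda_{\min}$ direction) shrinks by a factor of no better than about $1 - \eta\lambda_{\min} \approx 0.9992$ per step.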

This is a difficult problem because the step size has to be very small at the beginning, otherwise the iteration diverges; but a small step size then means we crawl slowly along the valley.
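Both failure modes can be reproduced with plain fixed-step gradient descent. A sketch in Python, assuming the commonly used starting point $(-1.2, 1)$ and two illustrative step sizes; the names `descend` and `blowup`, and the divergence threshold, are our own choices.

```python
import math


def rosenbrock(x, y):
    return (1.0 - x) ** 2 + 100.0 * (y - x * x) ** 2


def rosenbrock_grad(x, y):
    # Partial derivatives of (1 - x)^2 + 100 (y - x^2)^2
    dfdx = -2.0 * (1.0 - x) - 400.0 * x * (y - x * x)
    dfdy = 200.0 * (y - x * x)
    return dfdx, dfdy


def descend(eta, x=-1.2, y=1.0, n_steps=20_000, blowup=1e6):
    """Fixed-step gradient descent on the Rosenbrock function.

    Returns (x, y, steps taken, diverged flag); the run is declared diverged
    as soon as the iterate leaves the region |x| + |y| <= blowup.
    """
    for step in range(1, n_steps + 1):
        gx, gy = rosenbrock_grad(x, y)
        x, y = x - eta * gx, y - eta * gy
        if not (math.isfinite(x) and math.isfinite(y)) or abs(x) + abs(y) > blowup:
            return x, y, step, True
    return x, y, n_steps, False


for eta in (1e-2, 1e-3):
    x, y, steps, diverged = descend(eta)
    print(f"eta={eta}: diverged={diverged} after {steps} steps, "
          f"x={x:.4f}, y={y:.4f}, f={rosenbrock(x, y):.2e}")
```

With these assumed values the larger step blows up within a few iterations, while the smaller one stays stable but spends thousands of steps creeping along the curved valley towards $(1, 1)$.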

There are many adaptive methods that change the step size as the algorithm progresses.

Local minima

Gradient descent (with a suitable step size) converges to the global minimum if that is the only minimum, as for a convex function.

Otherwise it can get stuck in a local minimum.
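A one-dimensional example shows the problem. The double-well function $f(x) = x^4 - 3x^2 + x$ (our own illustrative choice) has a local minimum near $x \approx 1.13$ and the global minimum near $x \approx -1.30$; plain gradient descent simply rolls into whichever well it starts in.

```python
def f(x):
    # Double-well function: local minimum near x = 1.13, global minimum near x = -1.30.
    return x ** 4 - 3.0 * x ** 2 + x


def df(x):
    return 4.0 * x ** 3 - 6.0 * x + 1.0


def descend_1d(x, eta=0.01, n_steps=2000):
    """Plain fixed-step gradient descent in one dimension."""
    for _ in range(n_steps):
        x -= eta * df(x)
    return x


x_right = descend_1d(2.0)   # starts in the right-hand well
x_left = descend_1d(-2.0)   # starts in the left-hand well
print(f"from  2.0: x = {x_right:.4f}, f = {f(x_right):.4f}")
print(f"from -2.0: x = {x_left:.4f}, f = {f(x_left):.4f}")
```

Starting from $x = 2$, the iteration settles in the local minimum and never sees the deeper well on the left.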

There are global methods to find the global minimum in the presence of local minima:

- simulated annealing

- genetic algorithms

- basin-hopping

- …

They are stochastic methods: random moves let them escape from local minima.
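As an illustration of the first of these, a minimal simulated annealing sketch in plain Python. The proposal width, initial temperature and geometric cooling schedule are arbitrary assumptions chosen for this toy problem, not recommended settings. The test function is a hypothetical double well $f(x) = x^4 - 3x^2 + x$, with its global minimum near $x \approx -1.30$ and a local one near $x \approx 1.13$. Because the method is random, the run is seeded.

```python
import math
import random


def f(x):
    # Double-well test function: global minimum near x = -1.30, local one near x = 1.13.
    return x ** 4 - 3.0 * x ** 2 + x


def simulated_annealing(f, x0, t0=2.0, cooling=0.999, step=0.5, n_steps=5000, seed=0):
    """Minimal 1D simulated annealing with the Metropolis acceptance rule.

    Proposes x_new = x + Gaussian noise; downhill moves are always accepted,
    uphill moves with probability exp(-delta / t). The temperature t decays
    geometrically, so uphill moves become rarer as the run goes on.
    Returns the best point visited and its function value.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    t = t0
    for _ in range(n_steps):
        x_new = x + rng.gauss(0.0, step)
        f_new = f(x_new)
        delta = f_new - fx
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = x_new, f_new
            if fx < best_f:
                best_x, best_f = x, fx
        t *= cooling
    return best_x, best_f


# Start in the basin of the local minimum, where plain gradient descent would stay.
best_x, best_f = simulated_annealing(f, x0=2.0)
print(f"best x = {best_x:.3f}, f = {best_f:.3f}")
```

At high temperature the walker accepts enough uphill moves to cross the barrier between the wells, so the best point found should lie in the global well. Production implementations, such as SciPy's `scipy.optimize.basinhopping` and `scipy.optimize.dual_annealing`, are considerably more sophisticated.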